Khiem Pham

I am currently a Research Resident at VinAI Research, working with Dr. Nhat Ho and Dr. Hung Bui. I am broadly interested in Statistical Machine Learning, especially scalable algorithms.

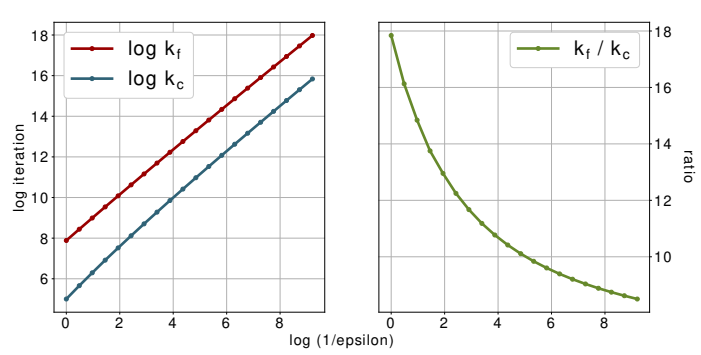

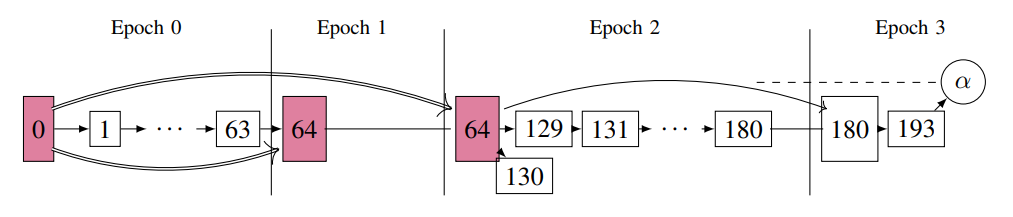

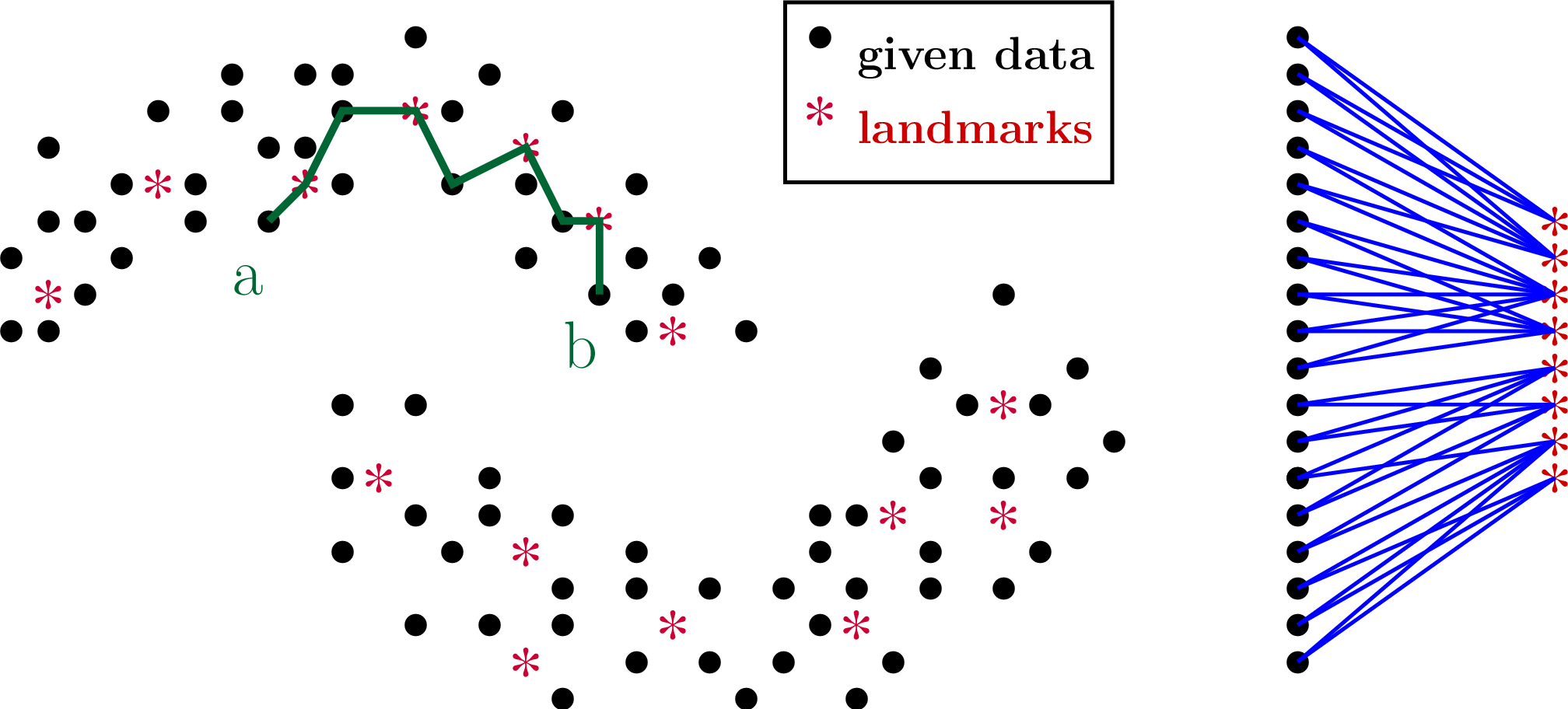

Currently, my main interest is Optimal Transport Theory (sample complexity theory, scalability, and Machine Learning applications). My secondary interests include (Deep) Probabilistic Modeling, Approximate Inference, and Deep Representation Learning. Lately, I have also been playing with Binary Neural Networks.